I am posting this AGAIN because it was flagged as spam and removed. I firmly believe it should be considered for the purpose of building a global and stable DLT. I would welcome a cordial explanation of why this post was considered spam. The notice read:

“Your post was flagged as spam : the community feels it is an advertisement, something that is overly promotional in nature instead of being useful or relevant to the topic as expected.”

The post:

On the Role of Purchasing Power and the CRRA Assumption in the DAP Model

Once again, the proposed economic framework appears detached from purchasing power, a foundational component of any tokenomics model. I’d like to re-emphasize this point, which I previously discussed in “Polkadot’s Economic Resilience and the Role of Inflation.”

1. Purchasing Power: The Missing Anchor

The DAP model is built upon deterministic issuance, consumption smoothing, and Constant Relative Risk Aversion (CRRA) assumptions. But it never grounds these flows in the real value of DOT, i.e., its purchasing power.

What constitutes wealth here? The proposal blurs the lines between the following categories and assigns them no probabilistic weighting. Consider this breakdown:

| Wealth Type | Description | Relation to Purchasing Power | DAP Handling |
|---|---|---|---|
| Coretime/Transaction Revenue | Protocol income from blockspace and fees | Scales with adoption; volatile in fiat terms | Inflows to DAP, but not dynamically modeled |
| Minted DOT Supply | Seigniorage from issuance (e.g., 55M DOT/year post-2026) | Dilutive if unbacked; erodes value in bear markets | Primary funding, smoothed via reserves |
| Productive Value | Security/blockspace utility | Emergent from network use; ties to validator ROI | Implicit in incentives, but under a static $r = 0$ assumption |

Treating “allocation” as “wealth” (with a 1:1 equivalence over time) implicitly assumes a stable DOT value, yet that assumption itself needs modeling, especially under exogenous shocks (e.g., a 50% price drop halves the fiat cost of attacking the network). Without anchoring to purchasing power, the CRRA framing says little about real resilience.
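To make the attack-cost point concrete, here is a minimal sketch. It assumes, for simplicity, that the attack cost equals the fiat price of acquiring a fixed share of staked DOT. One third is used here, the usual BFT fault threshold. `STAKED_DOT` and both prices are placeholders, not live network data.

```python
# Simplifying assumption: attack cost = fiat cost of buying a fixed share of staked DOT.
# STAKED_DOT and the prices are placeholders, not live network data.

def attack_cost_usd(staked_dot: float, dot_price_usd: float, share: float = 1 / 3) -> float:
    """Fiat cost of acquiring `share` of the staked DOT at the given price."""
    return staked_dot * dot_price_usd * share


STAKED_DOT = 600_000_000.0  # hypothetical staked amount
before = attack_cost_usd(STAKED_DOT, dot_price_usd=6.0)
after = attack_cost_usd(STAKED_DOT, dot_price_usd=3.0)  # the 50% price drop

print(f"before: ${before:,.0f}  after: ${after:,.0f}  ratio: {after / before:.2f}")
```

Because the cost is linear in price, the fiat security budget moves one-for-one with DOT's purchasing power. The nominal DOT figures do not change at all.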

To stress-test, assign rough probabilities to scenarios, as in the illustrative table below (crash at 20%). What happens to expected utility if the crash likelihood rises to 30%?

| Scenario (Prob.) | Purchasing Power Δ | Reserve Erosion Risk | Mitigation? |
|---|---|---|---|
| Bull (40%) | +20% | Low | Revenue boost |
| Stagnant (40%) | 0% | Medium | Baseline smoothing |
| Crash (20%) | -50% | High | Dynamic triggers needed |
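The expected-utility question can be made concrete with a short calculation. This is a minimal sketch. It uses the scenario weights and purchasing-power changes from the table. It treats utility as the log of the end-of-period purchasing-power multiplier, which is an assumption. For the 30% crash case, it assumes the extra ten points are taken equally from the bull and stagnant scenarios.

```python
import math

# (probability, purchasing-power change) per scenario, from the table above.
BASELINE = {"bull": (0.40, 0.20), "stagnant": (0.40, 0.00), "crash": (0.20, -0.50)}

# Assumption: a 30% crash likelihood takes 5 points each from bull and stagnant.
STRESSED = {"bull": (0.35, 0.20), "stagnant": (0.35, 0.00), "crash": (0.30, -0.50)}


def expected_change(scenarios):
    """Probability-weighted change in purchasing power."""
    return sum(p * delta for p, delta in scenarios.values())


def expected_log_utility(scenarios):
    """E[ln(1 + delta)]: log utility of the purchasing-power multiplier."""
    return sum(p * math.log1p(delta) for p, delta in scenarios.values())


for name, s in [("baseline", BASELINE), ("stressed", STRESSED)]:
    assert abs(sum(p for p, _ in s.values()) - 1.0) < 1e-12  # weights must sum to 1
    print(f"{name}: E[dPP] = {expected_change(s):+.3f}, "
          f"E[ln W] = {expected_log_utility(s):+.4f}")
```

Under these assumptions, the mean change moves from -2% to -8%. Expected log utility falls from about -0.066 to about -0.144, so the loss more than doubles. The log form weights the crash tail heavily, which is exactly where purchasing power matters most.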

2. CRRA and Log Utility: Is It Justified?

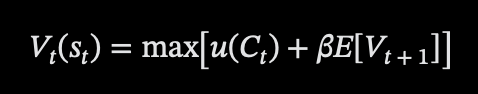

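Under CRRA with log utility, $u(c) = \ln c$, the model assumes:

$$\max_{\{c_t\}} \sum_{t=0}^{\infty} \beta^t \ln c_t \quad \text{s.t.} \quad W_{t+1} = (1+r)(W_t - c_t), \quad r = 0,$$

with optimal consumption declining ~9% annually (the Euler condition gives $c_{t+1} = \beta(1+r)\,c_t = 0.91\,c_t$). Yet CRRA presumes a behavioral agent optimizing real utility over time. In this case, there is no “agent”: only a deterministic protocol distributing funds to validators, Treasury, and reserves.

Thus, the log form becomes a formal convenience for tractable solutions, not an economic truth grounded in network behaviors like validator exits or capital flight.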
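The ~9% figure follows from the standard closed-form rule for infinite-horizon log utility. Each year, the protocol spends a fixed share (1 - β) of the remaining reserve, so consumption shrinks by a factor β per year when r = 0. This is a minimal sketch with β = 0.91. The starting reserve `w0` is a placeholder.

```python
# Closed-form log-utility (CRRA, gamma = 1) spending rule with r = 0:
# spend (1 - beta) of the remaining reserve each year, so c_{t+1} = beta * c_t.

def consumption_path(w0: float, beta: float, years: int) -> list[float]:
    """Yearly consumption under c_t = (1 - beta) * W_t with no return on reserves."""
    path = []
    wealth = w0  # placeholder starting reserve
    for _ in range(years):
        c = (1.0 - beta) * wealth
        path.append(c)
        wealth -= c  # r = 0: the unspent reserve earns nothing
    return path


BETA = 0.91
path = consumption_path(w0=1_000.0, beta=BETA, years=10)
for t, c in enumerate(path):
    print(f"year {t}: {c:7.2f}")
print(f"year-over-year ratio: {path[1] / path[0]:.2f}")
```

Nothing in this rule reacts to DOT's price. The 9% decline is fixed by β alone, which is the disconnect from purchasing power argued above.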

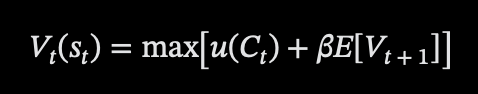

If the goal is resilience or utility, why not consider a convex utility aggregator over dimensions like {security, liquidity, adoption}, derived from actual system metrics? A Bellman equation approach,

$$V(s_t) = \max_{a_t} \Big\{ U(s_t, a_t) + \beta\, \mathbb{E}\big[ V(s_{t+1}) \mid s_t, a_t \big] \Big\},$$

with state variables $s_t$ such as the staking rate and DOT price, could capture feedback loops better than static math.
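A Bellman approach can be sketched as a toy value iteration in which the DOT price regime is part of the state. Every number here is an assumption chosen for illustration: the relative prices, the regime transition matrix, the reserve grid, and the per-period inflow. Utility is a shifted log of purchasing power, `log1p(spend * price)`, so that spending nothing stays finite.

```python
import math

# Toy model; every number below is an assumption chosen for illustration.
PRICES = [1.2, 1.0, 0.5]   # relative DOT price in bull, stagnant, crash regimes
P = [                      # regime transition probabilities (each row sums to 1)
    [0.6, 0.3, 0.1],
    [0.3, 0.5, 0.2],
    [0.2, 0.4, 0.4],
]
BETA = 0.91
MAX_RESERVE = 30           # reserve grid 0..30 in abstract DOT units
INFLOW = 3                 # per-period issuance added to the reserve


def utility(spend: int, price: float) -> float:
    """Shifted log of purchasing power, so that spending nothing stays finite."""
    return math.log1p(spend * price)


def value_iteration(tol: float = 1e-6, max_iter: int = 5_000):
    """Iterate V(r, k) = max_a { u(a, p_k) + beta * sum_j P[k][j] * V(r', j) }."""
    n = len(PRICES)
    V = [[0.0] * n for _ in range(MAX_RESERVE + 1)]
    policy = [[0] * n for _ in range(MAX_RESERVE + 1)]
    for _ in range(max_iter):
        V_new = [[0.0] * n for _ in range(MAX_RESERVE + 1)]
        delta = 0.0
        for r in range(MAX_RESERVE + 1):
            for k in range(n):
                best, best_a = -math.inf, 0
                for a in range(r + 1):  # spend a units out of reserve r
                    r_next = min(r - a + INFLOW, MAX_RESERVE)
                    cont = sum(P[k][j] * V[r_next][j] for j in range(n))
                    q = utility(a, PRICES[k]) + BETA * cont
                    if q > best:
                        best, best_a = q, a
                V_new[r][k] = best
                policy[r][k] = best_a
                delta = max(delta, abs(best - V[r][k]))
        V = V_new
        if delta < tol:  # contraction with factor BETA guarantees convergence
            break
    return V, policy


V, policy = value_iteration()
for k, label in enumerate(["bull", "stagnant", "crash"]):
    print(f"{label:>8}: spend {policy[20][k]} of a reserve of 20")
```

The price regime is part of the state. As a result, the policy can spend different amounts across regimes for the same reserve, which a fixed β^t schedule cannot do.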

3. The Discount Factor β ∈ (0,1)

The introduction of a discount factor (e.g., β = 0.91) creates a manual time lever in protocol design, balancing early ecosystem bets with long-term reserves.

In macroeconomics, β reflects human impatience. In Polkadot, it becomes a governance dial—tunable to shift funds across eras, potentially via OpenGov tracks or advisory committees.
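How strong the dial is can be shown with the same closed-form log-utility rule (spend 1 - β of the remaining reserve each year, r = 0). Under that rule, the share of a reserve spent in the first N years is 1 - β^N. The β values below are illustrative settings, not proposals.

```python
# Under the log-utility rule with r = 0, the unspent share after N years is beta**N,
# so the share spent in the first N years is 1 - beta**N.

def share_spent(beta: float, years: int) -> float:
    """Fraction of the initial reserve spent within `years` years."""
    return 1.0 - beta ** years


for beta in (0.85, 0.91, 0.95):  # illustrative dial settings
    print(f"beta = {beta:.2f}: {share_spent(beta, 5):.1%} of the reserve spent in 5 years")
```

Moving β from 0.91 to 0.95 cuts the five-year spend from about 38% to about 23%. A shift of that size in funding across eras comes from a single parameter.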

This raises centralization risk. Why not derive β from on-chain observables—like staking velocity, validator cost deviation, or adoption metrics? Endogenizing it via dynamic programming would align with actual network signals, avoiding arbitrary tuning.
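One hypothetical way to endogenize β is to use the standard mapping from a rate to a discount factor, β = 1/(1 + ρ), and to feed ρ from a smoothed on-chain signal. Here `observed_rates` stands in for such a signal (e.g., a real staking yield). The EMA weight and the clamp bounds are assumptions, not protocol parameters. This is a sketch of the idea, not a full dynamic-programming derivation.

```python
# Hypothetical sketch: derive beta from an observed annual rate instead of fixing it.
# The signal, smoothing weight, and clamp bounds are all illustrative assumptions.

def ema(values: list[float], alpha: float) -> float:
    """Exponential moving average; alpha is the weight on the newest observation."""
    avg = values[0]
    for v in values[1:]:
        avg = alpha * v + (1.0 - alpha) * avg
    return avg


def derive_beta(observed_rates: list[float], alpha: float = 0.3,
                lo: float = 0.85, hi: float = 0.98) -> float:
    """Map a smoothed rate rho to beta = 1 / (1 + rho), clamped to [lo, hi]."""
    rho = ema(observed_rates, alpha)
    beta = 1.0 / (1.0 + rho)
    return min(max(beta, lo), hi)


rates = [0.12, 0.10, 0.09, 0.10, 0.095]  # made-up yearly observations
print(f"derived beta: {derive_beta(rates):.3f}")
```

For reference, β = 0.91 corresponds to ρ = 1/0.91 - 1 ≈ 9.9%. The clamp keeps a noisy signal from pushing β outside a range that governance has approved.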

4. Governance and Allocation Efficiency

If decisions around the incentive shape, β, or stablecoin collateralization remain in the hands of a small advisory committee, how can stakers verify that allocations optimize network utility?

This setup invites governance capture unless it’s anchored in measurable, on-chain metrics such as:

- Validator cost coverage ratios (e.g., vs. $2k baseline)

- Treasury execution efficiency

- DOT purchasing power over time (e.g., fiat-adjusted reserves)

Without transparent dashboards or oracle-derived indicators, efficiency remains speculative. Proposal: embed KPI oracles to enable real-time audits.
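The metrics listed above reduce to simple ratios once an oracle supplies the inputs. This minimal sketch uses a hypothetical `Snapshot` record whose fields would come from an oracle or indexer. All values are placeholders, and the $2k cost baseline is applied per validator over an assumed common period.

```python
from dataclasses import dataclass


# Hypothetical KPI snapshot; field values would come from an oracle or indexer.
@dataclass
class Snapshot:
    dot_price_usd: float          # oracle price
    validator_reward_dot: float   # average reward per validator over the period
    validator_cost_usd: float     # cost baseline per validator, same period
    reserve_dot: float            # DOT held in reserves
    treasury_approved_dot: float  # DOT approved for spending
    treasury_spent_dot: float     # DOT actually disbursed


def kpis(s: Snapshot) -> dict[str, float]:
    return {
        # > 1.0 means rewards cover the validator cost baseline in fiat terms
        "cost_coverage": s.validator_reward_dot * s.dot_price_usd / s.validator_cost_usd,
        # fiat-adjusted reserves
        "reserve_usd": s.reserve_dot * s.dot_price_usd,
        # share of approved Treasury spending actually executed
        "treasury_execution": s.treasury_spent_dot / s.treasury_approved_dot,
    }


snap = Snapshot(dot_price_usd=4.0, validator_reward_dot=600.0, validator_cost_usd=2_000.0,
                reserve_dot=10_000_000.0, treasury_approved_dot=5_000_000.0,
                treasury_spent_dot=3_500_000.0)
for name, value in kpis(snap).items():
    print(f"{name}: {value:,.2f}")
```

Halving `dot_price_usd` halves both `cost_coverage` and `reserve_usd`, a sensitivity that nominal DOT accounting hides.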

5. Stablecoin Linkage: Closing the Loop

If DAP flows are used to mint DOT-backed stablecoins (e.g., overcollateralized at 150% to cover validator and Treasury fiat needs), purchasing power becomes endogenous to protocol solvency.
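A quick calculation shows how thin a 150% buffer is against the price moves in the scenario table. This minimal sketch assumes the stablecoin debt is fixed in fiat, so the collateral ratio scales directly with the DOT price. Liquidation mechanics are ignored.

```python
# Collateral ratio of a DOT-backed stablecoin position after a DOT price move.
# Assumes debt is fixed in fiat terms and ignores liquidations.

def collateral_ratio(initial_ratio: float, price_change: float) -> float:
    """Collateral value / debt after DOT moves by `price_change` (e.g. -0.5 for -50%)."""
    return initial_ratio * (1.0 + price_change)


def max_drop_before(initial_ratio: float, threshold: float) -> float:
    """Largest price drop absorbed before the ratio falls to `threshold`."""
    return 1.0 - threshold / initial_ratio


for move in (0.20, 0.0, -0.50):  # bull, stagnant, crash from the scenario table
    print(f"price {move:+.0%}: collateral ratio {collateral_ratio(1.5, move):.0%}")
print(f"drop that exhausts the buffer (ratio 100%): {max_drop_before(1.5, 1.0):.1%}")
```

A drop of about 33% exhausts a 150% buffer. The -50% crash scenario leaves the position at 75%, undercollateralized unless liquidations or reserve backstops act first.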

Key open questions:

- Who ensures reserve adequacy amid volatility?

- How are liquidation risks modeled (e.g., collateral buffers, backstops from strategic reserves)?

- Where is the feedback loop between inflation, volatility, and real obligations?

Leaving the stablecoin outside the optimization loop creates a disconnect between issuance and real-world economic costs, which is precisely the gap that purchasing-power analysis closes.

Key Questions

- What justifies treating allocation as “wealth” for applying CRRA (especially with $r = 0$)?

- Why log utility? Is there a principled rationale, or is it for mathematical simplicity?

- Can time preference (β) emerge endogenously from on-chain behavior instead of being fixed?

- How can stakers evaluate the efficiency of economic choices made by an unelected committee?

- Isn’t purchasing power a required variable in the model’s objective function?

- If backed by a stablecoin, how will liquidity and resilience be maintained—e.g., via overcollateralization, reserve buffers, or automated rebalancing?

The DAP is a mathematically elegant construct, but its optimization remains nominal unless grounded in real value. Without integrating purchasing power and liquidity feedbacks, the model risks optimizing abstractions rather than building true economic resilience.

Let’s iterate—@GehrleinJonas, any thoughts on Bellman simulations or Kusama testbeds to further stress-test these dynamics? Community, what’s your take on endogenous β?

Cheers,

Diego (@DiegoTristain)