A lot of the new Polkadot 2.0 technology under development uses the word “Core”:

- CoreTime

- CoreJam

- CorePlay

- CoreDA (OK, OK, I made this one up, but throughput is linear in the number of CORES…)

“CORE” is an extremely powerful word for permissionless ubiquitous computing because people already use it for personal computing: people (yes, YOU) choose one model of personal computer over another because it has a certain number of CORES. There used to be a company called EthCore, and founder @gavofyork uses the word for the 2.0 inventions above, as well as for non-computing cases.

Questions for all you CORES experts:

(1) What exactly is a CORE?

(2) Does Ethereum have just 1 CORE, and how do we know that?

(3) How many CORES does Polkadot have now and how can we measure how many it has later?

(4) How many CORES does { Bitcoin, Ripple, Solana, TON Chain, Avalanche, Cardano, … } have now and how can we measure how many it has later?

All of these are essentially the same sort of question as “what is the unit inch/horsepower/meter/… based on?”, and given how important CORES are to the future of Polkadot marketing, I would like everyone to be on the same page about this basic unit of measurement. Or, if it’s just a loose term like horsepower, we should know that.

CLAIM:

(a) If CORES are MEASURABLE, then we can replace “Polkadot does what Ethereum cannot” with something as understandable as “So, how many cores you got?”

(b) With the above CORES transformation, permissionless computing decisions mirror personal computing decisions

(c) With (b), marketing Polkadot’s complex technology to businesses becomes REALLY easy

I believe the Polkadot founders have, consciously or subconsciously, chosen the word CORE with this brilliant marketing intuition, and if we can answer “So, how many cores you got?” for the industry, Polkadot wins the scalability wars it set out to win, at its CORE =)


Actual relevant questions:

- How much computation can be validated under reasonable security assumptions?

- How much does this cost in energy, CPU time, and bandwidth?

I first suggested the term “core” to Rob H when we were designing the sharding together. At a technical level, “core” denotes the index into the bitfield used in availability voting, so “availability core” would be a more descriptive name. In practice “core” comes up often because backing touches many system functions, including the assignment of parablocks to “cores”.
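To make the “index into the availability bitfield” idea concrete, here is a minimal Python sketch, not Polkadot's actual implementation. It assumes each validator submits one bitfield per relay-chain block, with bit i set if it holds its erasure-coded chunk for the parablock occupying core i, and that a core's parablock counts as available once strictly more than 2/3 of validators have set that bit. The `AvailabilityBitfield` type and `available_cores` function are hypothetical names, and vote signatures are omitted.

```python
from dataclasses import dataclass


@dataclass
class AvailabilityBitfield:
    """Hypothetical model of one validator's availability vote.

    bits[i] is True if the validator claims to hold its chunk for the
    parablock currently occupying availability core i. Signatures omitted.
    """
    validator_index: int
    bits: list


def available_cores(bitfields, n_validators, n_cores):
    """Return the core indices whose bit is set by > 2/3 of all validators."""
    counts = [0] * n_cores
    seen = set()
    for bf in bitfields:
        # Ignore duplicate votes from the same validator and malformed bitfields.
        if bf.validator_index in seen or len(bf.bits) != n_cores:
            continue
        seen.add(bf.validator_index)
        for core, bit in enumerate(bf.bits):
            if bit:
                counts[core] += 1
    # Strict supermajority: count > 2n/3, written in integers as 3*count > 2n.
    return [core for core, c in enumerate(counts) if 3 * c > 2 * n_validators]


# Usage: 4 validators voting on 3 cores.
votes = [
    AvailabilityBitfield(0, [True, True, False]),
    AvailabilityBitfield(1, [True, False, False]),
    AvailabilityBitfield(2, [True, True, False]),
    AvailabilityBitfield(3, [False, True, True]),
]
print(available_cores(votes, n_validators=4, n_cores=3))  # → [0, 1]
```

The point of the sketch is that a “core” here is just a bit position: all the per-core work is counting votes, which is why the cores themselves cost almost no CPU time.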

I’m also somewhat responsible for the view that cores represent a performance metric, because I suggested that 500 cores on 1000 validators would make a nice “stretch” performance goal. Importantly, our “cores” merely optimize availability voting, so they themselves use almost no CPU time (under 1%, hopefully).

Approval voting occurs once availability finishes and the parablock leaves its (availability) core. Approvals should consume maybe 80% of CPU time and bandwidth. I designed approval voting to be surprisingly flexible and delay tolerant, so the term “core” fits poorly here. Yet “cores” provide the fixed-size pipe through which parablocks enter approvals, and backing decides whether that pipe looks too full.

Anyways…

We do play games like assigning two parablocks per core so that nodes verify fewer signed gossip messages. Along with async backing etc., this makes core count an artificial performance metric.

My proposed goal of 500 cores on 1000 validators really means that every 6 seconds each validator does 1-2 backing checks and 17ish approval checks, i.e. one parablock check every 0.25 seconds of total CPU time, plus overhead. Assuming 10 MB PoV blocks, this is nowhere near bandwidth saturation. We’ll need better parallelism, optimizations, etc. in Substrate of course, but it’s maybe doable…
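The per-validator load above is back-of-the-envelope arithmetic. A rough Python sketch of that kind of calculation follows, under assumptions not stated in the post: each core carries one parablock per 6 s relay-chain block, each parablock is approval-checked by 30 validators (the `checkers_per_parablock` value is an assumption), backing adds about one check per validator, and `approval_overhead` is a hypothetical multiplier for extra checks such as no-show replacements. All names are illustrative.

```python
def per_validator_load(n_cores, n_validators, block_time_s=6.0,
                       checkers_per_parablock=30, backing_per_slot=1.0,
                       approval_overhead=1.0):
    """Rough checks per validator per relay-chain block, system fully used.

    Assumptions (illustrative, not measured): one parablock per core per
    block, `checkers_per_parablock` approval checks per parablock, spread
    evenly over all validators, and `approval_overhead` >= 1 for extra checks.
    Returns (approval checks, total checks, seconds of budget per check).
    """
    approvals = (n_cores * checkers_per_parablock * approval_overhead
                 / n_validators)
    total_checks = backing_per_slot + approvals
    seconds_per_check = block_time_s / total_checks
    return approvals, total_checks, seconds_per_check


# Usage: the 500 cores on 1000 validators stretch goal.
approvals, total, budget = per_validator_load(500, 1000)
print(f"{approvals:.1f} approvals, {total:.1f} checks, {budget:.3f} s per check")
# → 15.0 approvals, 16.0 checks, 0.375 s per check
```

Plugging in the post's 17ish approvals and 2 backing checks (for example `approval_overhead=17/15, backing_per_slot=2.0`) gives 6/19 ≈ 0.32 s per check. The post's 0.25 s figure is tighter than this simple model, so treat the sketch as showing the shape of the calculation rather than reproducing it.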

As for Ethereum…

After elastic scaling works, we should temporarily run a Solana-style single-collator EVM chain on Kusama, but with an EVM glutton filling it up, and push up how many cores it can consume using elastic scaling.


@burdges Thanks for providing some detail, but I also just want to know the bottom line from the tech marketer’s perspective:

- Cores are a Polkadot-only construct. True or false?

- It is not reasonable to measure cores on any other blockchain besides Polkadot. True or false?

- More cores = more CoreTime. True or false?

- More cores = more Data Availability. True or false?

- More cores = more CoreJam. True or false?

- More cores = more CorePlay. True or false?

- Polkadot has more cores than all the other blockchains combined. True or false?

Since CORES are an artificial performance metric, is there any kind of objective metric by which multiple blockchains can be compared uniformly, along the lines of your actually relevant question, “How much computation can be validated under reasonable security assumptions?”

Thank you!

@burdges Can you explain:

- How the ~50 cores estimate came about?

- How growth to 500-1000 cores can happen?

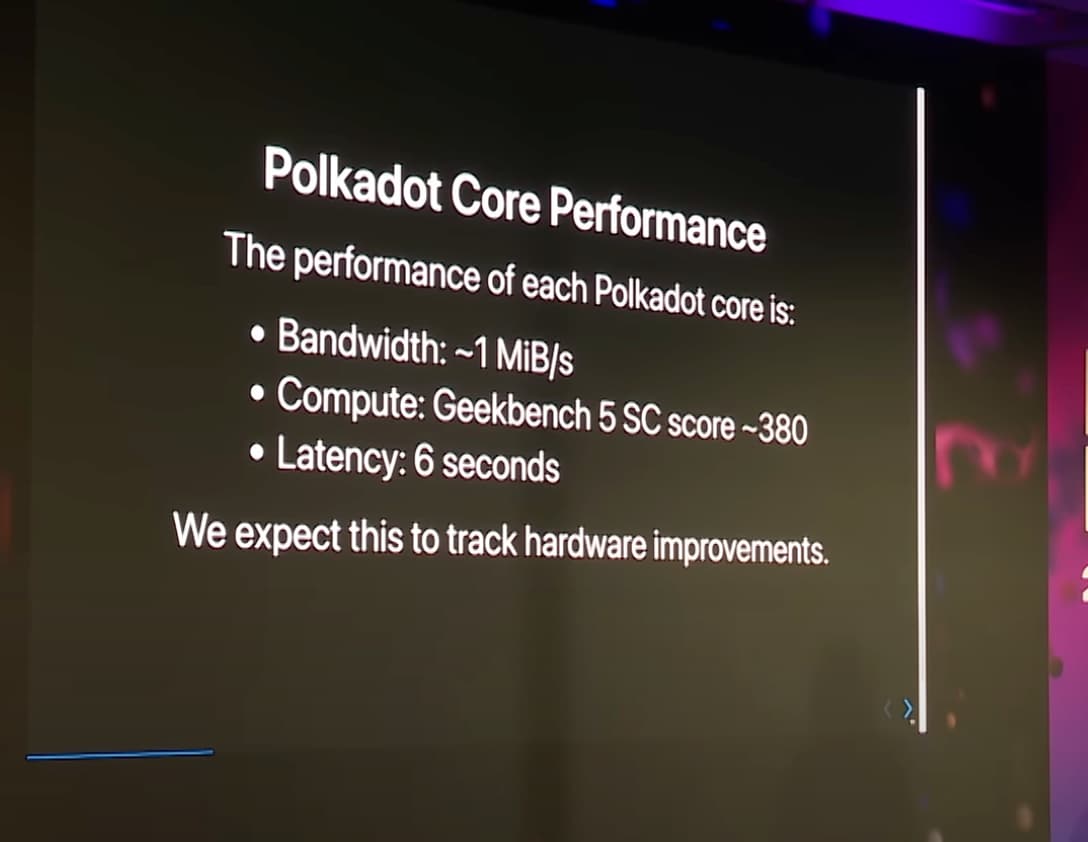

- How the ~1 MiB/s per-core bandwidth measurement was made?

- How the Geekbench 5 SC ~380 assessment was made?

This is from

Is it possible to argue that Ethereum has 50x fewer cores based on its “bandwidth”?
